Ollama on Emacs with Ellama

Are you interested in running a local AI companion within Emacs? If so, you're in the right place! In this article, we'll guide you through setting up an Ollama server to run Llama 2, Code Llama, and other AI models. This way, you'll be able to integrate these models seamlessly into your Emacs workflow.

How to Install Ollama

Installing Ollama on your system is a straightforward process.

To get started, visit https://ollama.ai/download and follow the provided instructions.

It's essential not to blindly execute commands and scripts. Instead, take the time to review the source code and ensure it aligns with your requirements. You can modify the code to suit your needs or make adjustments after installation.

For a quick installation via the command line, you can use the following command:

```shell
curl https://ollama.ai/install.sh | sh
```

This command not only installs Ollama but also sets it up as a service that starts automatically on your system. Although the service uses minimal resources when idle, it's good practice to inspect the script's source code first, make any necessary modifications, and configure it according to your preferences.

You can read the source code of the current installation script at https://ollama.ai/install.sh.

Once you've set up Ollama, you can start an interaction like this:

```shell
ollama run codellama "Write me a function in JavaScript that outputs the Fibonacci sequence"
```

Or, if you prefer a command-line chat interface:

```shell
ollama run codellama
```

During the initial run, the codellama model will be downloaded. It is just one of the many models offered by the Ollama project, so you can pick whichever one suits you best. Keep in mind that each model may have different hardware requirements.

At the time of writing, Ollama supports the following models, as listed on its GitHub page.

| Model              | Parameters | Size  |
| ------------------ | ---------- | ----- |
| Mistral            | 7B         | 4.1GB |
| Llama 2            | 7B         | 3.8GB |
| Code Llama         | 7B         | 3.8GB |
| Llama 2 Uncensored | 7B         | 3.8GB |
| Llama 2 13B        | 13B        | 7.3GB |
| Llama 2 70B        | 70B        | 39GB  |
| Orca Mini          | 3B         | 1.9GB |
| Vicuna             | 7B         | 3.8GB |

Please note that you should have at least 8 GB of RAM to run the 3B models, 16 GB to run the 7B models, and 32 GB to run the 13B models.

If you're interested in exploring more models, you can find a comprehensive library at https://ollama.ai/library.

For the purposes of this article, we'll be using the codellama model.

Installing Ellama

Ellama is a nicely assembled package by Sergey Kostyaev, built on top of the llm library package. It offers a set of powerful functions designed to integrate Emacs and Ollama seamlessly, enhancing your workflow and productivity.

For a closer look at Ellama and to stay updated on the project's current status, you can visit the official repository on GitHub: https://github.com/s-kostyaev/ellama.

You can install it via M-x package-install, or simply declare it with use-package in your init.el, like this:

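```elisp
(use-package ellama
  :init
  ;; setopt requires Emacs 29 or later.
  (setopt ellama-language "English")
  ;; Ollama backend from the llm package (an Ellama dependency).
  (require 'llm-ollama)
  ;; Use the local codellama model for both chat and embeddings.
  (setopt ellama-provider
          (make-llm-ollama
           :chat-model "codellama" :embedding-model "codellama")))
```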
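If you'd rather use another model from the table above, only the model names in the provider need to change. The sketch below assumes you have already downloaded mistral (for example by running ollama run mistral once). It also spells out the server address through :host and :port; treat those two keywords as an assumption about llm-ollama's options. The values shown are meant to match the local defaults (localhost, port 11434), so you would only change them for an Ollama server running on another machine.

```elisp
(require 'llm-ollama)

;; Variant of the provider above: Mistral for chat and embeddings.
;; :host and :port are assumed llm-ollama options; the values below are
;; the local defaults, so change them only for a non-local server.
(setopt ellama-provider
        (make-llm-ollama
         :chat-model "mistral"
         :embedding-model "mistral"
         :host "localhost"
         :port 11434))
```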

You're almost there! To activate these new features, simply evaluate this region or restart Emacs.

Once you've done this, you can use M-x to access a wealth of handy functions for querying your local Ollama server directly from within Emacs. This makes your interaction with Ollama more seamless and efficient.
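Before trying the commands, you may want to confirm that Emacs can reach the Ollama server at all. The helper below is hypothetical (my-ollama-list-models is not part of Ellama). It assumes Ollama listens on its default address, http://localhost:11434, and queries the /api/tags endpoint, which returns the locally installed models as JSON.

```elisp
(require 'url)
(require 'json)

;; Set by url-http in the response buffer; declared to silence the byte-compiler.
(defvar url-http-end-of-headers)

(defun my-ollama-list-models ()
  "Echo the models installed on the local Ollama server.
Hypothetical helper: assumes Ollama listens on http://localhost:11434."
  (interactive)
  (condition-case err
      (let ((buf (url-retrieve-synchronously
                  "http://localhost:11434/api/tags" t t 5)))
        (if (not buf)
            (message "No response from Ollama (timed out?)")
          (unwind-protect
              (with-current-buffer buf
                ;; Skip the HTTP headers; the JSON body follows them.
                (goto-char url-http-end-of-headers)
                ;; json-read returns an alist; "models" is a vector of alists.
                (let ((models (alist-get 'models (json-read))))
                  (message "Ollama models: %s"
                           (mapconcat (lambda (m) (alist-get 'name m))
                                      models ", "))))
            (kill-buffer buf))))
    ;; Connection refused, bad JSON, etc.
    (error (message "Could not reach Ollama: %s"
                    (error-message-string err)))))
```

Running M-x my-ollama-list-models should echo names such as codellama:latest if the server is up and the model has been pulled.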

Using Ellama

Commands:

- ellama-chat: Initiate a conversation with Ellama by entering prompts in an interactive buffer.

- ellama-ask (Alias for ellama-chat): Ask questions and converse with Ellama.

- ellama-ask-about: Ask Ellama about a selected region or the current buffer's content.

- ellama-translate: Request Ellama to translate a selected region or word at the cursor.

- ellama-define-word: Find definitions for the current word using Ellama.

- ellama-summarize: Generate a summary for a selected region or the current buffer.

- ellama-code-review: Review code in a selected region or the current buffer with Ellama.

- ellama-change: Modify text in a selected region or the current buffer according to a provided change.

- ellama-enhance-grammar-spelling: Improve grammar and spelling in the selected region or buffer using Ellama.

- ellama-enhance-wording: Enhance wording in the selected region or buffer.

- ellama-make-concise: Simplify and make the text in the selected region or buffer more concise.

- ellama-change-code: Change selected code according to a provided change.

- ellama-enhance-code: Enhance selected code according to a provided change.

- ellama-complete-code: Complete selected code according to a provided change.

- ellama-add-code: Add new code based on a description, generated with provided context.

- ellama-render: Convert selected text or the text in the current buffer to a specified format.

- ellama-make-list: Create a markdown list from the selected region or the current buffer.

- ellama-make-table: Generate a markdown table from the selected region or the current buffer.

- ellama-summarize-webpage: Summarize a webpage fetched from a URL using Ellama.

You can check the full documentation on the Ellama GitHub page.

Some special Ellama customizations

While this project already offers fantastic functions for seamless integration of Ollama into Emacs, I discovered the need for some additional keybindings to further boost my productivity.

As a result, I've developed a customization that introduces two valuable features:

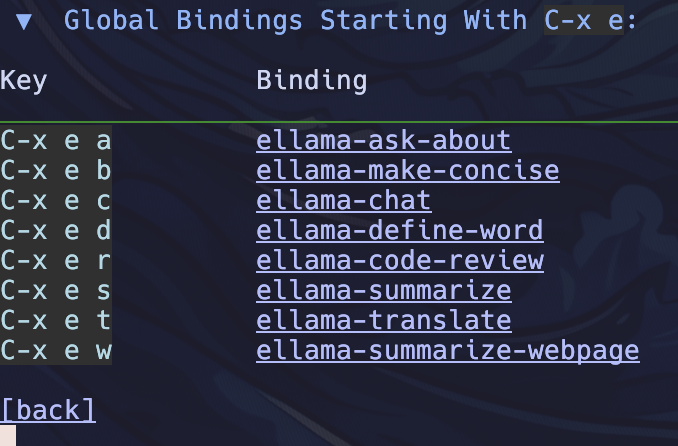

1. Global Keybinding Prefix: I've created a global keybinding prefix, C-x e, which hosts a range of Ellama-related commands. To explore the full list of available commands, press C-x e C-h, or take advantage of the which-key package to receive hints for command completion.

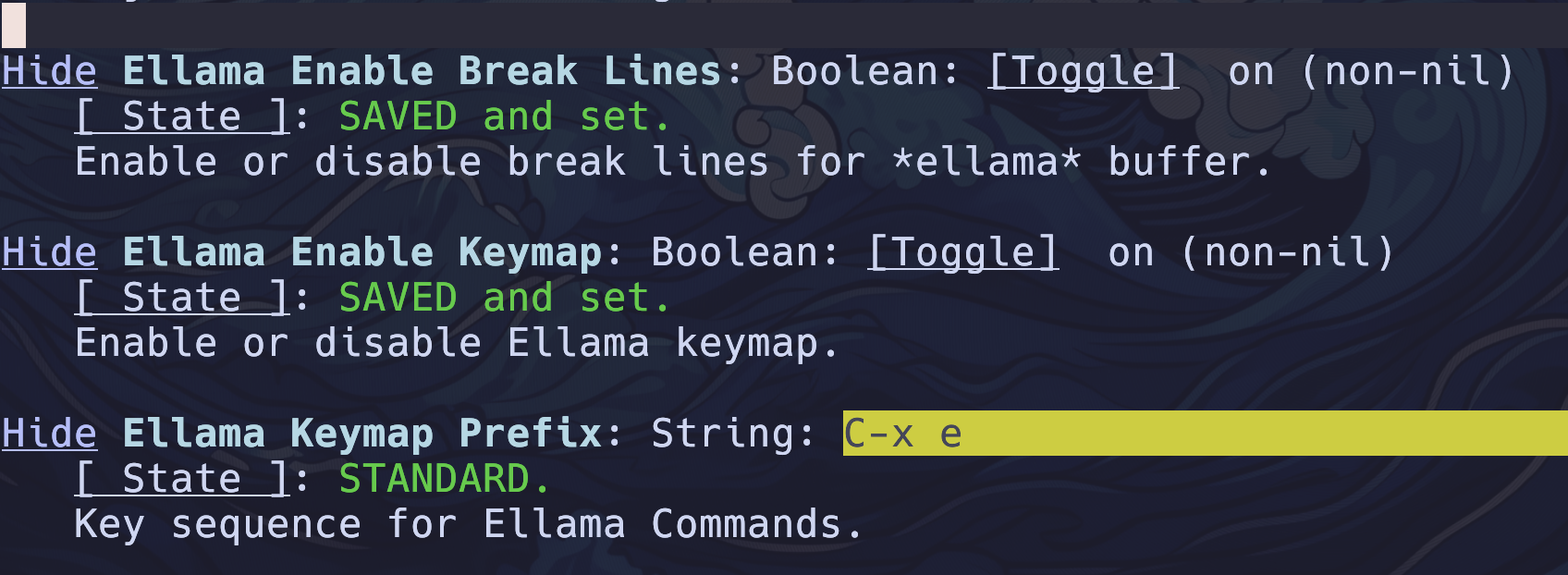

These features, including the toggle and the keybinding prefix, are fully configurable through customize-group ellama. This allows you to tailor your Ellama integration in Emacs to suit your unique workflow and preferences.

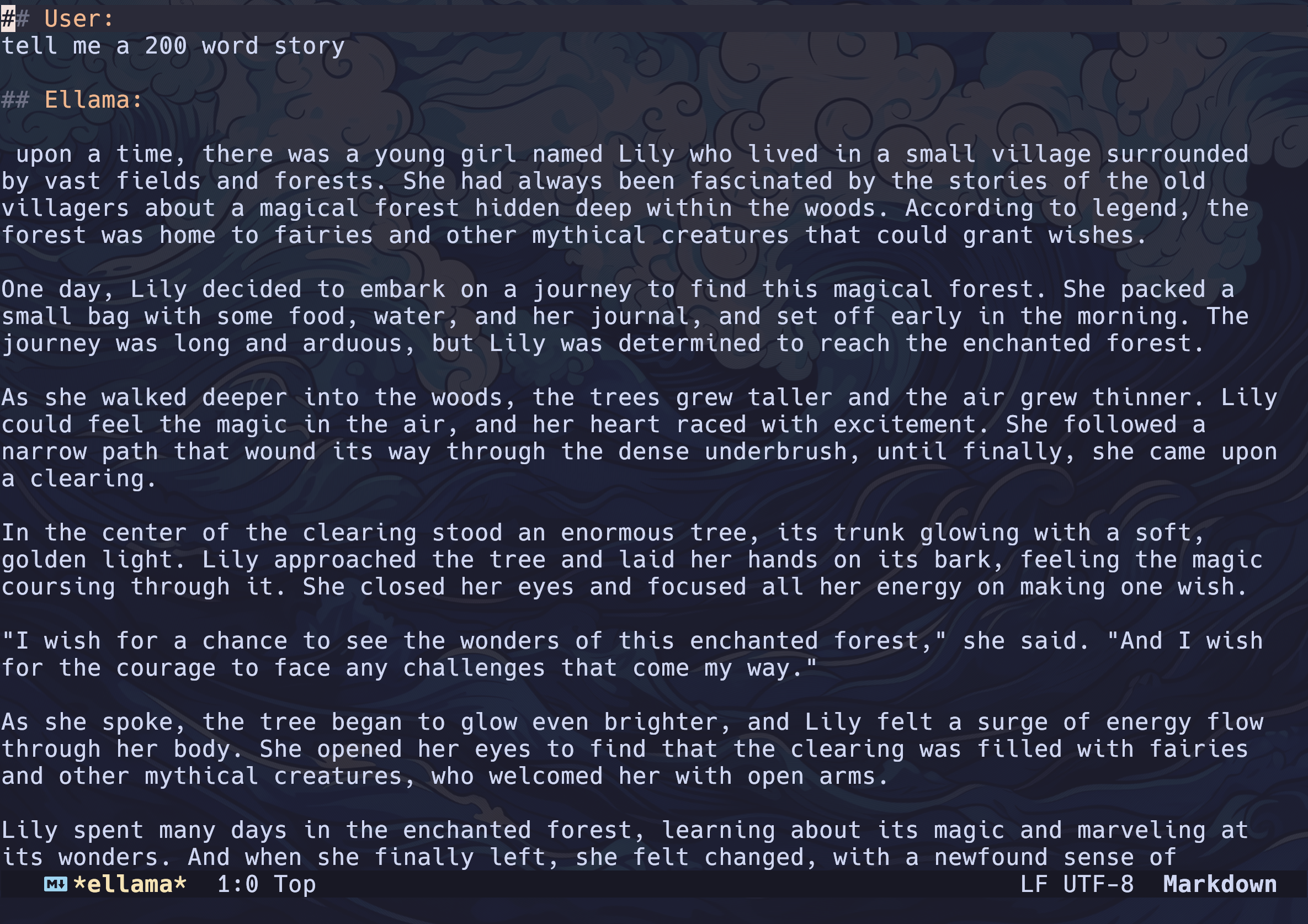

2. Visual-Line Mode for All *ellama* Buffers: I've introduced a straightforward customization that automatically applies visual-line mode to all *ellama* buffers. This mode wraps long lines at word boundaries, improving readability.

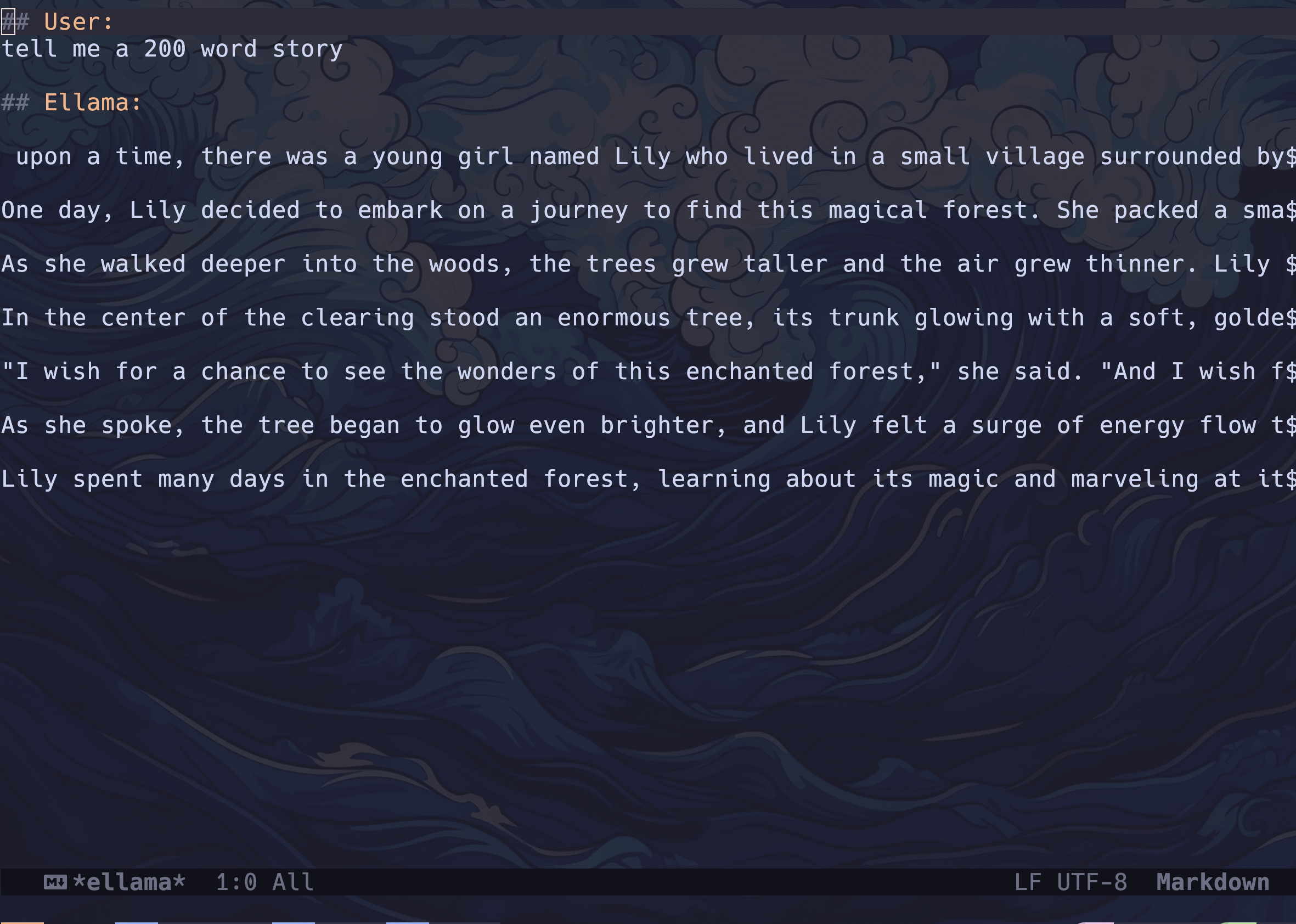

Additionally, I've included an option in customize-group ellama that allows you to switch this feature on or off. With it enabled, a buffer like the one depicted below will be displayed with its long lines wrapped.

This enhancement simplifies the reading experience within Ellama-related buffers, making it more user-friendly and adaptable to your needs.
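The configuration in this post covers only the keymap, so here is a minimal, independent sketch of the visual-line option, not the code from the PR. The option name my-ellama-enable-visual-line-mode and the helper function are hypothetical, and the buffer check assumes Ellama buffer names contain "*ellama", as the *ellama* buffers described here do (adjust the regexp if your Ellama version names its buffers differently). It relies on window-buffer-change-functions, available since Emacs 27, so visual-line mode is switched on whenever such a buffer is shown in a window.

```elisp
;; Hypothetical option, grouped with Ellama's settings in Customize.
(defcustom my-ellama-enable-visual-line-mode t
  "When non-nil, turn on `visual-line-mode' in *ellama* buffers."
  :type 'boolean
  :group 'ellama)

(defun my-ellama-visual-line-in-windows (frame)
  "Enable `visual-line-mode' in *ellama* buffers shown on FRAME.
Assumes Ellama buffer names contain \"*ellama\"."
  (when my-ellama-enable-visual-line-mode
    (dolist (win (window-list frame 'no-minibuf))
      (with-current-buffer (window-buffer win)
        (when (and (string-match-p "\\*ellama" (buffer-name))
                   (not visual-line-mode))
          (visual-line-mode 1))))))

;; Emacs 27+: the global value of this hook is called with a frame whenever
;; a window on that frame is added, deleted, or starts showing another buffer.
(add-hook 'window-buffer-change-functions #'my-ellama-visual-line-in-windows)
```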

As mentioned earlier, invoking customize-group ellama grants access to these settings.

To set up the keybinding prefix and its toggle, you can add the following to your configuration:

```elisp
;; Defined first so the function and the toggle below can rely on it.
(defcustom ellama-keymap-prefix "C-x e"
  "Key sequence for Ellama Commands."
  :type 'string
  :group 'ellama)

;; Prefix keymap that holds the Ellama commands.
(defvar ellama-keymap (make-sparse-keymap)
  "Keymap for Ellama Commands.")

(defun ellama-setup-keymap ()
  "Set up the Ellama keymap and bindings."
  (interactive)
  ;; Note: C-x e runs `kmacro-end-and-call-macro' by default; binding the
  ;; prefix here shadows that command.
  (define-key global-map (kbd ellama-keymap-prefix) ellama-keymap)
  ;; Each entry is (KEY COMMAND DESCRIPTION); only KEY and COMMAND are used.
  (let ((key-commands
         '(("a" ellama-ask-about "Ask about selected region")
           ("b" ellama-make-concise "Better text")
           ("c" ellama-chat "Chat with Ellama")
           ("d" ellama-define-word "Define selected word")
           ("r" ellama-code-review "Code-review selected code")
           ("s" ellama-summarize "Summarize selected text")
           ("t" ellama-translate "Translate the selected region")
           ("w" ellama-summarize-webpage "Summarize a web page"))))
    (dolist (key-command key-commands)
      (define-key ellama-keymap (kbd (car key-command)) (cadr key-command)))))

(defcustom ellama-enable-keymap t
  "Enable or disable Ellama keymap."
  :type 'boolean
  :group 'ellama
  ;; :set runs when the option is first defined and whenever it is changed
  ;; through Customize (or setopt), so the bindings follow its value.
  :set (lambda (symbol value)
         (set symbol value)
         (if value
             (ellama-setup-keymap)
           ;; If ellama-enable-keymap is nil, remove the key bindings
           (define-key global-map (kbd ellama-keymap-prefix) nil))))
```
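The third element of each key-commands entry above is only a description, which ellama-setup-keymap doesn't use. If you rely on which-key, one option is to pass the same labels to which-key-add-key-based-replacements so its popup shows them instead of raw command names. A minimal sketch, assuming it is evaluated after the configuration above so that ellama-keymap-prefix is already defined; if you later change the prefix, the labels would need to be re-registered.

```elisp
;; Label the Ellama prefix and its keys in which-key's popup.
;; Assumes `ellama-keymap-prefix' is already defined.
(with-eval-after-load 'which-key
  (let ((prefix ellama-keymap-prefix))
    (which-key-add-key-based-replacements
      prefix "ellama"
      (concat prefix " a") "Ask about selected region"
      (concat prefix " b") "Better text"
      (concat prefix " c") "Chat with Ellama"
      (concat prefix " d") "Define selected word"
      (concat prefix " r") "Code-review selected code"
      (concat prefix " s") "Summarize selected text"
      (concat prefix " t") "Translate the selected region"
      (concat prefix " w") "Summarize a web page")))
```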

I proposed these enhancements in a PR. By the time you read this post, it may have been accepted and integrated into the core package, rejected, partially accepted, or simply become old news. Either way, you can use these functions and customize them as you see fit.

Dealing with hanging requests

If you, like me, sometimes find yourself running commands only to later realize that your AI is overthinking or stuck, it's valuable to know how to "cancel" a request.

While there didn't seem to be a dedicated cancel feature for AI requests, Emacs offers a way to promptly terminate the processes it is running.

So, in case your request is taking too long or you suspect an error, and you wish to cancel it, you can follow these steps:

1. Execute M-x list-processes to display a list of all processes currently running in Emacs.

2. Locate the line corresponding to the process you want to terminate.

3. Press d to kill that specific process.
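If you do this often, the same steps can be wrapped in a small command. my-kill-process-by-name below is a hypothetical helper, not part of Ellama: it offers the names from process-list with completion and calls delete-process on the one you pick, which is what pressing d in the list-processes buffer does. You still need to recognize which process belongs to the stuck request.

```elisp
(defun my-kill-process-by-name (name)
  "Delete the running process called NAME, chosen with completion."
  (interactive
   (list (completing-read "Kill process: "
                          (mapcar #'process-name (process-list))
                          nil t)))
  (let ((proc (get-process name)))
    (if (not proc)
        ;; Empty input or a process that already exited.
        (message "No process named %s" name)
      (delete-process proc)
      (message "Killed process %s" name))))
```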

This quick and effective method allows you to regain control and manage your processes efficiently within Emacs.

Conclusion

In conclusion, we've explored the exciting world of integrating Ollama with Emacs, enhancing our workflow and productivity. While the core package offers impressive functionality, we've taken customization a step further, introducing global keybindings and visual-line mode for ellama buffers.

These features not only simplify your interactions but also put you in the driver's seat when it comes to fine-tuning your Emacs-Ollama integration. Whether you're reading this with these enhancements already integrated, in anticipation of their acceptance, or as a source of inspiration for your own customizations, the tools are here for you to wield.